Introduction:

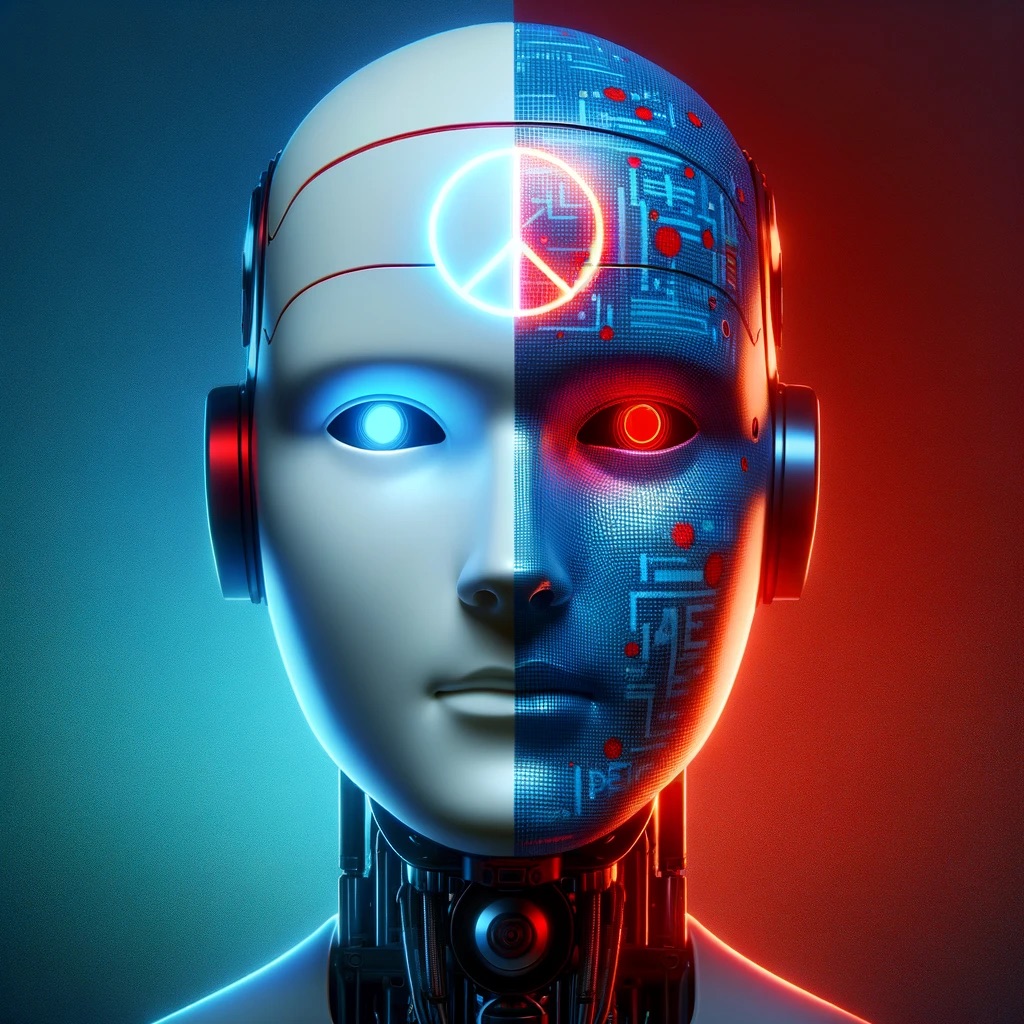

In an era when technology and artificial intelligence (AI) are advancing at an unprecedented pace, a pressing ethical question emerges: should robots be programmed with the capability to kill if doing so could save human lives? The question tests not only our technological capabilities but also reaches deep into moral and ethical territory.

The Case for Training Robots:

Proponents of training robots for lethal capabilities argue from a utilitarian perspective. In scenarios like hostage situations, war zones, or terrorist threats, robots equipped with lethal force could theoretically intervene with greater precision and less risk to innocent lives. The argument hinges on the belief that AI, devoid of human emotions, can make more rational and less biased decisions in life-threatening situations.

Ethical Concerns:

However, the ethical implications are profound and multifaceted. The primary concern revolves around the value of human judgment and moral responsibility. Can an AI truly understand the value of human life? The delegation of life-and-death decisions to machines raises questions about accountability, especially in the event of errors or unintended casualties.

International Perspective and Legal Framework:

Globally, there is no consensus on this issue. Some countries advocate for strict regulations or outright bans on autonomous weapons systems, while others invest in robotic military technology. The lack of a unified legal framework governing the use of lethal AI creates a grey area that could lead to international disputes and ethical quandaries.

Technological Limitations and Risks:

The current state of AI technology is another critical factor. Today's AI systems lack a nuanced understanding of human ethics and context. They operate on algorithms and data, both of which can be flawed or biased. The risks of malfunction, hacking, and misuse add further layers of complexity to the debate.

Conclusion:

The question of whether robots should be trained to kill, even if doing so could save lives, remains deeply polarizing. It is a matter not only of technological capability but also of ethical and legal considerations. As AI continues to evolve, so too must our discussions and frameworks around its use in life-and-death scenarios.

Call to Action:

We invite readers to join the conversation. What are your thoughts on this crucial topic? Share your views and help shape the discourse around the ethical use of AI in our society.
